MODERATION SOLUTION

One unmoderated post is enough to trigger a crisis.

Bodyguard is a hybrid moderation solution that detects and blocks risky content in real time, across all your channels, before it escalates.

The moderation solution trusted by teams who can’t afford mistakes.

Why choose Bodyguard as your moderation solution?

A modern moderation solution automates the repetitive work that slows teams down: detection, triage, responses, and reporting.

Beyond saving time, Bodyguard helps protect your brand and deliver safer, more engaging online experiences.

Stop moderating manually

Spend less time sorting toxic comments and spam.

Bodyguard automates detection, triage, and alerts so your team stays focused on what matters.

Stop negative buzz before it starts

Instantly detect and block hate speech, harassment, and fraud across all your channels.

Your rules stay consistent, even when volume spikes.

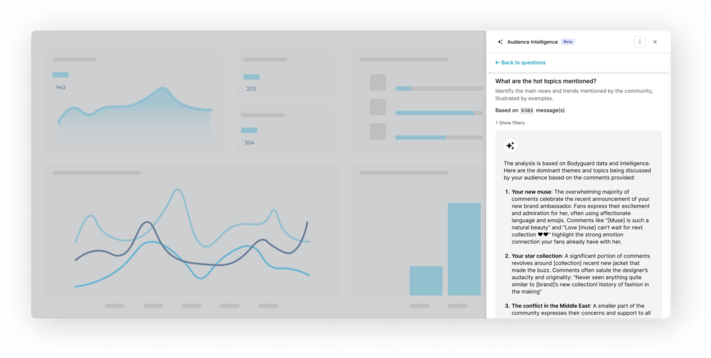

Make your comments useful (and actionable)

Turn healthy conversations into insights and better engagement.

Result: safer threads, higher-quality interactions, and a stronger, better-protected brand.

An all-in-one moderation solution, built for and with marketers

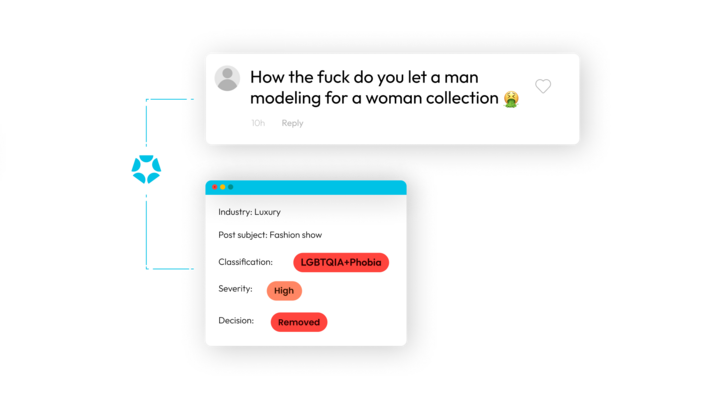

Classic filters miss context. We moderate intent.

Bodyguard detects and neutralizes risky content in real time, without slowing your teams down. You keep conversations healthy, even when volume spikes.

Ultra-precise hybrid moderation: AI + rules + human oversight to cut errors and stay in control.

Advanced contextual detection: understands intent (irony, slang, humor) to reduce false positives.

45+ languages covered: protect global communities while respecting local nuances.

Continuously updated models: enriched by our linguists to keep up with new forms of toxicity.

Take back control, starting here.

Bodyguard’s moderation solution helps highly exposed teams regain control of their online spaces.

Turn moderation into a driver of safety, trust, and performance.

Connect Bodyguard to every online space (even the most sensitive)

Respond faster to risks: connect Bodyguard to your platforms to centralize critical signals and trigger the right actions at the right time.

Improve team coordination through smooth integrations with your existing social channels. Everyone works from the same source of truth, with full context and no silos.

Save time every day by automating moderation and alerts. Your teams focus on decision-making and community engagement, not manual content triage.

Discover how Bodyguard can support your teams

They say it better than we do

Discover why leading industry players trust our solution.

© 2025 Bodyguard.ai — All rights reserved worldwide.